Organisations are interested in data sharing for numerous reasons, and deduplication is one of the most common. This is the first part of a short series of posts looking at different options for data sharing, especially for deduplication purposes.

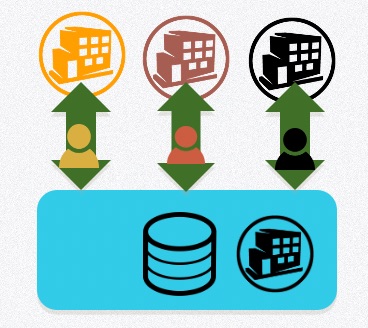

Centralising the data is the most common approach to deduplication. Essentially, the thought is that if we put all the data together in one place, we can figure out the duplicates. This works if there are agreed standards for what data will be collected and how it is structured.

Let’s assume the purpose is agreed (deduplication) and the organisations are legally permitted to collect and share data. Additionally, we’ll assume standards are agreed and the organisations in question trust each other enough. Yes, those are big assumptions, but let’s go with it.

The centralised approach can be thought of as a common data layer, and it is becoming more common. A consortium typically has a prime agency, which then sub-grants parts of the job/contract to other agencies. The prime is responsible to the donor for reporting and implementation, so the centralised data layer approach fits neatly into this model. The prime provides the common data layer themselves or contracts a supplier to do it for them. We see this model when organisations operate under similar legal jurisdictions.

The other model we are seeing is a donor or a powerful organisation telling others to use its system, which effectively becomes the centralised data layer. We see this model when organisations operate under significantly different legal jurisdictions (e.g. UN vs NGO).

For deduplication, the data put into the data layer is determined either by the group or by the data layer ‘owner’. It can be:

- All the personally identifiable information (PII)

- A select group of PII data fields

- A hash of either of the two options above

- A biometric
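To make the hash option in the list above concrete, here is a minimal sketch in Python. Everything specific in it is an assumption for illustration: the field names, the normalisation rules, the shared key and the choice of a keyed SHA-256 hash (HMAC) are not taken from any particular system. In practice they would be set by the standards the group agrees.

```python
import hashlib
import hmac
import unicodedata

# Hypothetical shared secret, agreed between the organisations out of band.
SHARED_KEY = b"example-key-agreed-out-of-band"

# Hypothetical field selection; the real list would come from the agreed standards.
DEDUP_FIELDS = ("given_name", "family_name", "date_of_birth")


def normalise(value: str) -> str:
    """Lower-case, strip accents and collapse whitespace so trivial variations match."""
    decomposed = unicodedata.normalize("NFKD", value)
    without_accents = "".join(c for c in decomposed if not unicodedata.combining(c))
    return " ".join(without_accents.lower().split())


def dedup_token(record: dict) -> str:
    """Return a keyed SHA-256 hash over the selected, normalised PII fields."""
    joined = "|".join(normalise(record[field]) for field in DEDUP_FIELDS)
    return hmac.new(SHARED_KEY, joined.encode("utf-8"), hashlib.sha256).hexdigest()
```

Each organisation would compute the token locally and submit only the token to the data layer. Note that hashing only works if everyone normalises the fields in exactly the same way, which is one more reason the standards matter.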

The deduplication process is relatively straightforward as it is ‘run’ in the data layer. The standards should articulate what process will be followed if a duplicate is found. It’s worth pointing out that no deduplication process is perfect. There are always errors, which must be planned for.
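As a rough illustration of what ‘running’ deduplication in the data layer could look like, the sketch below groups submissions by a matching token (for example, a hash of selected PII fields) and reports any token submitted more than once. The function name and the tuple layout are hypothetical, and this is exact matching only. A misspelt name or a mistyped date of birth produces a different token, so the duplicate is missed, which is one source of the errors mentioned above.

```python
from collections import defaultdict


def find_duplicates(submissions):
    """Group (organisation, record_id, token) tuples by token.

    Returns a dict mapping each token seen more than once to the list of
    (organisation, record_id) pairs that submitted it.
    """
    by_token = defaultdict(list)
    for organisation, record_id, token in submissions:
        by_token[token].append((organisation, record_id))
    return {token: refs for token, refs in by_token.items() if len(refs) > 1}


# Hypothetical submissions from three agencies.
submissions = [
    ("agency_a", "A-001", "token-1"),
    ("agency_b", "B-417", "token-1"),
    ("agency_c", "C-020", "token-2"),
]
duplicates = find_duplicates(submissions)
```

What happens next to the records flagged in `duplicates` (who is notified, which record is kept) is exactly what the agreed standards need to spell out.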

So we have a central dataset in a data layer. The political question arising immediately is who ‘owns’ or ‘controls’ the data layer. In the two examples above it’s the prime agency or the powerful agency. In addition to owning the data, they effectively carry the liability as well. However, this also means they can revoke any agency’s access to the data at any point. This can be beneficial if there is a security breach, but it also happens during displays of power. And yes, this happens in the humanitarian space regularly. While we are on access, the person the data is about has no say over it and never gets access to it.

The organisation holding the data layer is powerful. They will often decide on the technology to be used. They justify various actions by reminding others of the ‘service’ they are providing and the liability they carry.

This model is based on a dated operating model and does not utilise readily available technology. It reinforces the existing power dynamics between organisations and keeps the ‘beneficiary’ on the sidelines.
